— By Dr Glen C. Collins

Glen C. Collins recently directed the Applied Artificial Intelligence Ireland Forum & Technical Conference held in Kilkenny in March 2019. He is a Chartered Engineer, regional committee member and volunteer with Engineers Ireland. He holds bachelor’s and master’s degrees in Electrical Engineering and a master’s Certificate in Acoustical Engineering from the Georgia Institute of Technology and has a PhD in Artificial Intelligence from Vanderbilt University. He is currently a Kilkenny-based consultant in professional audio, satellite communications, and software engineering, and an Associate Lecturer at IT Carlow.

This article is an attempt to shed some light on the topic of Artificial Intelligence (AI) and to highlight what’s important to know about it. Links to further resources may help you keep up with this growing technology.1

Every week, the news media features articles about some aspect of artificial intelligence (AI). This flow of seemingly urgent information can be overwhelming and bewildering, with authors contradicting one another and some making dire predictions about the future. To make matters worse, so much is written about AI that it has become difficult to distinguish genuine science from science fiction.

Although brief, this article is an attempt to shed some light on the topic of AI, and to give you the highlights – what’s important to know – about AI, with some links to further resources that can help you keep up with this growing technology.1

John McCarthy, considered by many to be the father of AI, originally coined the term artificial intelligence in 1956 and defined it as “the science and engineering of making intelligent machines, especially intelligent computer programs.”

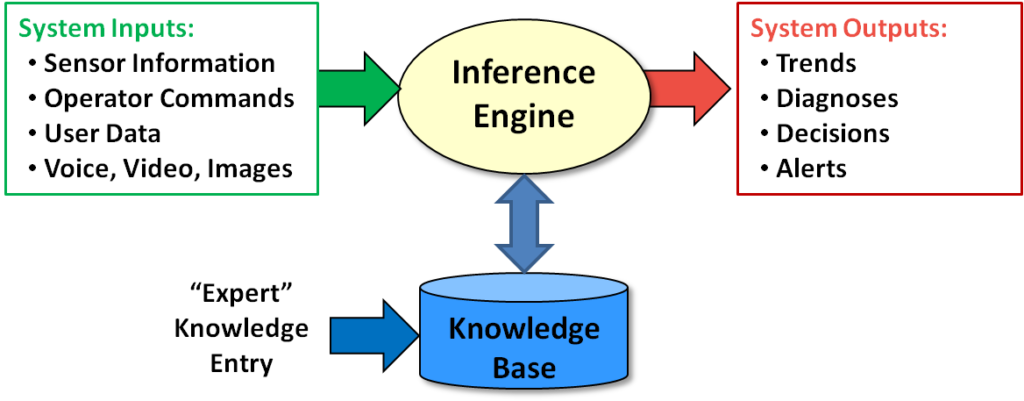

An AI computer program differs from other computer software by having three separate components (see Figure 1.1):

Figure 1.1 – Traditional AI Systems Have 3 Components.

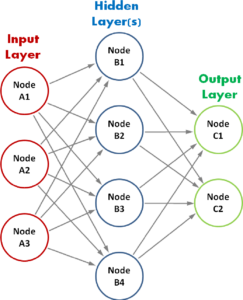

Figure 1.2 – Neural Networks Use Layers of Interconnected Computing Nodes.

In traditional AI, the knowledge provided by human experts is stored as patterns of various kinds. These patterns draw from many computational techniques: rule-based reasoning, forward/backward chaining, case-based reasoning, fuzzy logic, probabilities, and neural networks.
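To make one of these techniques concrete, here is a minimal sketch of rule-based reasoning with forward chaining. The rules and fact names (`has_fur`, `says_meow`, and so on) are hypothetical and chosen only for illustration. The point is that the expert-supplied knowledge (`RULES`) is kept separate from the small piece of code that reasons over it (`forward_chain`).

```python
# Hypothetical knowledge base: each rule is (set of premises, conclusion).
# These rules are illustrative only; a real system would hold expert knowledge.
RULES = [
    ({"has_fur", "says_meow"}, "is_cat"),
    ({"is_cat"}, "is_mammal"),
    ({"is_mammal"}, "is_animal"),
]


def forward_chain(facts, rules):
    """Fire every rule whose premises are all known, and repeat
    until a full pass over the rules derives nothing new."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"has_fur", "says_meow"}, RULES)
print(sorted(derived))
# prints ['has_fur', 'is_animal', 'is_cat', 'is_mammal', 'says_meow']
```

Changing what the program "knows" means editing `RULES` only; the reasoning loop stays the same.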

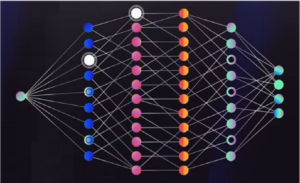

More recently, successful and commercially useful AI programs have been built using ‘deep neural networks’ (DNNs). As illustrated in Figure 1.2, neural networks are multi-layered, interconnected groups of computing code (called ‘nodes’), each of which performs a specific function and stores its own data settings about its connections (called ‘links’) to other nodes. This arrangement combines the traditionally separate knowledge base with the inference engine; knowledge may be stored as probabilities and weights for each link to adjacent nodes. A neural network is ‘deep’ when it has many layers of nodes (at least 4 or 5, typically more, as shown in Figure 1.3).
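As a rough illustration of nodes, links and weights, the sketch below passes an input through a very small network. The layer sizes, the weight values and the choice of a sigmoid function at each node are all assumptions made for the example. A real DNN would have many more layers, and its weights would be learned rather than typed in.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Each node sums its weighted incoming links, adds its bias,
    and applies the sigmoid function to produce its output."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the outputs of one layer into the next, layer by layer."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Illustrative values only: 2 inputs -> 3 hidden nodes -> 1 output node.
# Each inner list holds the link weights coming into one node.
LAYERS = [
    ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.0]),
]

output = network_forward([1.0, 0.0], LAYERS)
print(output)  # a single value between 0 and 1
```

Note that the "knowledge" here is nothing more than the numbers in `LAYERS`, which is why the knowledge base and the inference engine are no longer separate.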

DNNs are almost exclusively used for Machine Learning (ML), where we no longer extract knowledge from human experts but instead use algorithms that can learn from data, and a lot of it. In ML, the system is “trained” using massive sets of associated inputs and outputs. This is called Supervised Learning: for example, photos (the input) are each labelled “a cat” (the output).

Figure 1.3 – Deep Neural Networks Have Multiple Hidden Layers. Image Credit: Bloomberg Businessweek

The AI system “learns” by generating stored values that match these data pairings to represent the patterns contained in the training data.2 Once trained, the AI system uses the stored ‘knowledge’ to ‘recognize’ similar patterns in real-world situations, thereby exhibiting intelligent functionality. Other forms of ML include Unsupervised Learning3 (pattern extraction) and Reinforcement Learning.4
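The idea of learning from labelled pairs can be sketched with a single node trained by the classic perceptron rule. This is far simpler than a deep network and stands in for it only to show the principle. The two yes/no features (`has_whiskers`, `meows`) and the four training pairs are hypothetical.

```python
# Hypothetical labelled training pairs:
# (has_whiskers, meows) -> 1 if the label is "a cat", otherwise 0.
TRAINING_PAIRS = [
    ((0, 0), 0),
    ((0, 1), 0),
    ((1, 0), 0),
    ((1, 1), 1),
]


def predict(weights, bias, features):
    """Output 1 ("a cat") when the weighted sum of the inputs is positive."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train(pairs, epochs=20, learning_rate=1.0):
    """Perceptron rule: after each wrong answer, nudge the stored
    weights and bias in the direction that reduces the error."""
    weights = [0.0] * len(pairs[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in pairs:
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_PAIRS)
predictions = [predict(weights, bias, features) for features, _ in TRAINING_PAIRS]
print(predictions)  # prints [0, 0, 0, 1]
```

Nobody tells the program the rule "whiskers and meowing means cat". The stored `weights` and `bias` come to encode it purely from the examples.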

Beginning in ~950 BC with Yan-Shi’s automaton, ~100 BC with the Antikythera ‘computer’ (Figure 2.1) and in the first century with Heron of Alexandria’s automata, inventors have attempted to create machines that could exhibit intelligent behaviour. Modern AI research formally began in 1956 with a summer workshop for computer scientists at Dartmouth College, and spent the next 55 years cycling wildly between brief successes (e.g. winning at games such as chess and Go) and periods of disfavour (called ‘AI Winters’).5

Figure 2.1 – The Antikythera mechanism was an early ‘intelligent’ computer because it could predict sun, moon and planetary movements.

For educators, it is important to recognize the underlying reasons for AI’s repeating pattern of ebb and flow, as it is likely to recur. Historically, when a successful breakthrough is achieved, the resulting excitement becomes coupled with a tendency to over-promise and over-predict what will soon be possible with the new form of AI (some call it ‘hype’). Unfortunately, previous AI breakthroughs have been followed by disappointment and de-funding when the overly optimistic predictions could not be realized. Additionally, what constitutes machine ‘intelligence’ has suffered from the AI Effect, where onlookers discount the behaviour of an AI program by arguing that it is not real intelligence.6 This “moving the goalposts” occurs when a new AI development that has suffered from the AI Effect becomes accepted as routine (e.g., optical character recognition used to be considered machine intelligence, but is now merely machine automation).

The AI Effect is clearly visible in present-day debates about the Singularity7 (where machine AI reaches an alleged point when it could learn and grow on its own, outstripping human capabilities) and the creation of the term Artificial General Intelligence (AGI). AGI, wherein a machine is claimed to be actually thinking and reasoning, is distinguished from the current wave of DNN implementations which some see as mere pattern recognizers.8

So what’s different this time around? AI’s current popularity is a result of AI’s first large-scale commercially deployable development: machine learning via deep neural networks using massively parallel computers (Figure 3.1) and huge data sources. Sixty years of research finally have yielded AI-derived programs that can be used by a wide range of businesses for products and services that generate more money than the up-front costs of developing and maintaining the AI program.

Figure 3.1 – HPC4 in Milan is a typical high-performance computer using thousands of CPUs and GPUs enabling deep neural networks to be trained by millions of input data pairs.

Today’s DNN-based AI programs are finding wide applicability9 in areas such as natural language processing, speech recognition, machine vision, image recognition, process control, autonomous vehicles/aircraft, medical diagnosis, pharmaceuticals design, and financial analytics, to name a few.10 Because the DNN implementations ‘learn’ from training data (and, increasingly, from live data streams), it is easier to maintain them with updated data. Note that pattern recognition is the key capability enabled by DNNs, but these systems do not represent any forward progress toward AGI because there is no mechanism to support ‘reasoning’ or decision strategies.11 Will this popularity be sustained? We’ll have to wait and see.

A Few Names to Know

Significant original ‘founders’: John McCarthy, Frank Rosenblatt, Marvin Minsky, Herbert Simon

Contributors: Lotfi Zadeh, Judea Pearl, Rodney Brooks, Ray Kurzweil, Nick Bostrom, John Hopfield

Deep neural network developers: Fei-Fei Li, Geoffrey Hinton, Andrew Ng

For further introductory reading, consider these links:

An introduction to Artificial Intelligence by Arun C Thomas: https://hackernoon.com/understandingunderstanding-an-intro-to-artificial-intelligence-be76c5ec4d2e

Introduction to Artificial Intelligence – What is AI and Why AI Matters: https://dataflair.training/blogs/artificial-intelligence-tutorial/

Free Online course “The Elements of AI”: https://www.elementsofai.com/

Introduction to AI: https://www.researchgate.net/publication/325581483_Introduction_to_Artificial_intelligence

Free online slides: https://www.slideserve.com/JasminFlorian/introduction-to-artificial-intelligence

Great article about AI in Ireland: https://www.forbes.com/sites/alisoncoleman/2018/08/25/howireland-is-fast-becoming-the-ai-island/#67239d796121

Topbots: https://www.topbots.com/

Science News: https://www.sciencenews.org/article/nine-companies-steering-future-artificialintelligence

MIT Technology Review: https://www.technologyreview.com/magazine/2017/11/?fbclid=IwAR02UonES0Bk66TK2KfGe_iB7Zys4DkCDMsJi7qNuuEo5P8Dz6PA9d-S7nU

Applied AI Ireland Forum & Technical Conference: https://ai2.ie

AI Summit Conference: https://www.aisummit.ie

NVIDIA AI Articles: https://blogs.nvidia.com/blog/2016/07/29/whats-difference-artificialintelligence-machine-learning-deep-learning-ai/

End Notes: